In this guide, you will learn all you need to know about PyTorch loss functions. Loss functions give your model the ability to learn, by determining where mistakes need to be corrected. In technical terms, training a machine learning model is an optimization problem in which the goal is to minimize the value of the loss function.

By the end of this guide, you’ll have learned the following:

- What loss functions are and how they fit into machine learning and deep learning workflows

- How loss functions are implemented in PyTorch using the torch.nn module

- What some common loss functions are in PyTorch and when to use which

- How to implement custom loss functions in PyTorch

- How to monitor loss functions in PyTorch to optimize your models


Understanding Loss Functions for Deep Learning

In working with deep learning or machine learning problems, loss functions play a pivotal role in training your models. A loss function assesses how well a model is performing at its task and is used in combination with the PyTorch autograd functionality to help the model improve.
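To make the link between a loss function and autograd concrete, here is a minimal sketch. The single weight, the inputs, and the targets are made-up values chosen for illustration, not part of any real model:

```python
import torch
import torch.nn as nn

# A single learnable weight; requires_grad=True asks autograd to track it
weight = torch.tensor([1.0], requires_grad=True)

# Illustrative data: the targets follow targets = 2.0 * inputs
inputs = torch.tensor([1.0, 2.0, 3.0])
targets = torch.tensor([2.0, 4.0, 6.0])

criterion = nn.MSELoss()
predictions = weight * inputs
loss = criterion(predictions, targets)

# backward() computes d(loss)/d(weight) and stores it in weight.grad
loss.backward()

print(f'Loss: {loss.item()}')
print(f'Gradient: {weight.grad}')
```

The gradient is negative here, which tells an optimizer that increasing the weight (toward 2.0) would lower the loss.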

It can be helpful to think of our deep learning model as a student trying to learn and improve. A loss function is similar to a teacher evaluating the student’s performance, indicating where there is room for improvement.

Defining Loss Functions

A loss function, also known as a cost or objective function, is used to quantify the difference between the predictions made by your model and the actual truth values. In plainer terms, the function is used to measure how far off a model’s predictions are from reality.

The goal of training is to minimize the loss, since a shrinking loss signals that our model’s predictions are getting closer and closer to the truth. Because of this, loss functions serve as the guiding force behind the training process, allowing your model to improve over time.

Understanding Loss Functions

Loss functions aren’t a one-size-fits-all. Different deep learning tasks demand different loss functions that meet the needs of different models. PyTorch provides many different loss functions, which we’ll get into shortly.

The important thing to realize is that different types of deep learning problems will require different loss functions. That is to say, classification tasks, such as image classification with a convolutional neural network, will require different loss functions than regression problems.
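As a rough illustration of that difference, the sketch below feeds a regression loss and a classification loss the kinds of inputs each one expects. All tensor values are invented for the example:

```python
import torch
import torch.nn as nn

# Regression: predictions and targets are floats of the same shape
reg_predictions = torch.tensor([[2.5], [0.3]])
reg_targets = torch.tensor([[3.0], [0.0]])
reg_loss = nn.MSELoss()(reg_predictions, reg_targets)

# Classification: one row of raw scores (logits) per sample, three classes,
# and integer class indices as targets
logits = torch.tensor([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])
labels = torch.tensor([0, 2])
cls_loss = nn.CrossEntropyLoss()(logits, labels)

print(f'Regression loss: {reg_loss.item()}')
print(f'Classification loss: {cls_loss.item()}')
```

Both calls return a single scalar, but the shapes and data types they accept differ, which is one reason the loss has to match the task.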

Also important to understand is that loss functions are often a balancing act between precision and robustness. We’ll dive into this more in the section on monitoring loss functions. For now, it’s important to know that selecting a loss function isn’t arbitrary, but rather a strategic decision. Different loss functions penalize different kinds of errors, so your choice shapes what the model learns to get right.

Some loss functions prioritize a tight fit to the data at the cost of being sensitive to outliers, while others provide a balance between robustness and precision. Because of this, understanding the details of loss functions is critical to achieving the model performance you want.

Now that you have a good understanding of what loss functions are and why they’re important, let’s start exploring how they’re implemented in PyTorch.

How Loss Functions are Implemented in PyTorch

PyTorch offers the nn module in order to streamline implementing loss functions in your PyTorch deep learning projects. The nn module provides many different neural network building blocks, as well as a wide spectrum of pre-implemented loss functions that cover many different machine learning tasks.

One of the main benefits of using the nn module is that you can use loss functions without having to write them by hand. This also makes your code easier for others to understand and adapt.

Importing Loss Functions in PyTorch

In order to use pre-built loss functions in PyTorch, we can import the torch.nn module, which is often imported using the alias nn. Let’s start off by importing both PyTorch as well as just the neural network module. From there, let’s see how we can import a number of different loss functions.

```python
# How to Import Loss Functions in PyTorch
import torch
import torch.nn as nn

# Example loss function imports
mse_loss = nn.MSELoss()          # Mean Squared Error (L2 Loss)
mae_loss = nn.L1Loss()           # Mean Absolute Error (L1 Loss)
ce_loss = nn.CrossEntropyLoss()  # Cross-Entropy Loss
```

In the code block above, we implemented three different loss functions. We instantiated the following classes: nn.MSELoss() for the mean squared error loss, nn.L1Loss() for the mean absolute error loss, and nn.CrossEntropyLoss() for the cross entropy loss.

Many of these classes accept arguments that customize their behavior, such as the reduction argument that controls how the per-element losses are combined. Once the classes are instantiated, we can pass values into each of these loss functions.
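One customization that most built-in losses share is the reduction argument, which accepts 'mean' (the default), 'sum', or 'none'. The short sketch below shows its effect on nn.MSELoss; the tensor values are made up for illustration:

```python
import torch
import torch.nn as nn

predicted = torch.tensor([1.0, 2.0, 4.0])
actual = torch.tensor([1.0, 3.0, 2.0])

# 'mean' averages the squared errors (this is the default)
mean_loss = nn.MSELoss(reduction='mean')(predicted, actual)

# 'sum' adds the squared errors together
sum_loss = nn.MSELoss(reduction='sum')(predicted, actual)

# 'none' returns one squared error per element
per_element = nn.MSELoss(reduction='none')(predicted, actual)

print(mean_loss, sum_loss, per_element)
```

Keeping the per-element values can be handy when you want to weight samples yourself before averaging.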

Let’s take a look at how we can use one of these loss functions to calculate the loss of two tensors:

```python
# Calculating MSE Loss in PyTorch
import torch
import torch.nn as nn

# Create sample values
predicted = torch.tensor([2.5, 4.8, 6.9, 9.5])
actual = torch.tensor([3.0, 5.0, 7.0, 9.0])

# Create and use criterion
criterion = nn.MSELoss()
loss = criterion(predicted, actual)
print(f'MSE Loss: {loss}')

# Returns: MSE Loss: 0.13749998807907104
```

In the example above, we calculated the mean squared error loss using the nn.MSELoss() class. Once the function is implemented, we can pass our tensors into the function, allowing us to calculate the loss.

Now that you have a good understanding of how loss functions are implemented in PyTorch, let’s dive into exploring the most common loss functions available in the deep learning library.

Common Loss Functions for Deep Learning

In this section, we’ll explore several of the most commonly used loss functions. These loss functions have different strengths and weaknesses; each of them is more adept at different tasks. We’ll dive into when to use different loss functions in the next section. For now, we’ll explore what some of the most important functions are.

The Mean Absolute Error (MAE) loss, or L1 Loss, calculates the average absolute differences between predicted values and their actual targets. This is especially useful when you want your model to be less sensitive to outliers. The formula for the L1 loss is:

L1 Loss = (1/n) * Σ|y_pred - y_actual|

The Mean Squared Error (MSE) loss, or L2 Loss, is used to measure the average of squared differences between the predicted and ground truth values. Because the function squares the differences, it is much more sensitive to outliers, especially compared to the MAE loss function. The formula for L2 loss is:

L2 Loss = (1/n) * Σ(y_pred - y_actual)^2

The Cross-Entropy Loss is an important loss function for classification tasks, which measures the dissimilarity between the predicted class probabilities and the actual class labels. In PyTorch, nn.CrossEntropyLoss applies the softmax function (as a log-softmax) internally, converting raw model outputs into a probability distribution across all the different classes, so you pass it raw scores rather than probabilities. This loss function encourages the model to assign high probabilities to the correct classes. The formula for cross-entropy loss is:

Cross-Entropy Loss = -Σ(y_actual * log(y_pred))

Now that you have a good understanding of some of the most important loss functions in PyTorch, let’s explore when you might want to use each one.
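As a sanity check, the three formulas from this section can be computed by hand and compared with the built-in classes. This is a sketch with invented sample values; the cross-entropy part averages over the two samples, which matches the default reduction of nn.CrossEntropyLoss:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

y_pred = torch.tensor([2.5, 4.8, 6.9, 9.5])
y_actual = torch.tensor([3.0, 5.0, 7.0, 9.0])

# L1 and L2 losses written out from their formulas
manual_l1 = (y_pred - y_actual).abs().mean()
manual_l2 = ((y_pred - y_actual) ** 2).mean()

builtin_l1 = nn.L1Loss()(y_pred, y_actual)
builtin_l2 = nn.MSELoss()(y_pred, y_actual)

# Cross-entropy: raw scores (logits) for 3 classes and integer class labels
logits = torch.tensor([[2.0, 1.0, 0.1], [0.5, 2.5, 0.3]])
labels = torch.tensor([0, 1])

# log(y_pred) in the formula is the log of the softmax probabilities
log_probs = F.log_softmax(logits, dim=1)
# y_actual in the formula is the one-hot encoding of the labels
one_hot = F.one_hot(labels, num_classes=3).float()
manual_ce = -(one_hot * log_probs).sum(dim=1).mean()

builtin_ce = nn.CrossEntropyLoss()(logits, labels)

print(manual_l1.item(), builtin_l1.item())
print(manual_l2.item(), builtin_l2.item())
print(manual_ce.item(), builtin_ce.item())
```

Each printed pair should agree up to floating-point rounding.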

Choosing the Right Loss Function for Deep Learning

Choosing the right loss function is an important decision that significantly impacts the performance and convergence of your deep learning model. Choosing the right loss function is both an art and a science – you can use guiding principles to help make informed decisions, but you also need to understand the data that you’re working with.

Here is a step-by-step guide for choosing the right loss function for your PyTorch deep-learning project:

- Understand your problem: Begin by getting an understanding of your problem domain. For example, are you working with a regression task to predict continuous values or a classification task to predict discrete classes? Similarly, understanding whether or not you have specific requirements for precision, robustness, or handling outliers will guide your decision.

- Analyze your data: Examine the distribution and characteristics of your dataset, such as whether there are outliers, class imbalances, or other defining characteristics. If there are outliers, a loss function that is less sensitive to them, such as MAE, may be more appropriate.

- Leverage Domain Knowledge: Leverage any domain-specific knowledge you have about the problem. Certain loss functions better align with a problem’s underlying specifics.

- Consider Model Architecture: The architecture of your model can also influence your loss function choice. Different model architectures may lend themselves better to specific loss functions. For instance, convolutional neural networks (CNNs) are commonly paired with Cross-Entropy Loss for image classification tasks.

- Prioritize Model Goals: Define the goals you want your model to achieve. Is precision more important than sensitivity to outliers? Are you willing to trade off a bit of accuracy for a more robust model? Understanding your priorities will guide you toward a loss function that aligns with these goals.

- Experiment and Monitor: Experimenting with different loss functions can provide valuable insights into which loss function works best for your specific problem. Similarly, while training your model, closely monitor its convergence and performance metrics.
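To see why outliers matter when you analyze your data, the following sketch compares MAE and MSE on the same predictions with and without a single large error. The numbers are invented for illustration:

```python
import torch
import torch.nn as nn

actual = torch.tensor([1.0, 2.0, 3.0, 4.0])

# Every prediction is off by 0.5
clean_pred = torch.tensor([1.5, 2.5, 3.5, 4.5])
# Same predictions, but the last one is off by 10
outlier_pred = torch.tensor([1.5, 2.5, 3.5, 14.0])

mae = nn.L1Loss()
mse = nn.MSELoss()

mae_clean, mae_outlier = mae(clean_pred, actual), mae(outlier_pred, actual)
mse_clean, mse_outlier = mse(clean_pred, actual), mse(outlier_pred, actual)

print(f'MAE: {mae_clean.item()} -> {mae_outlier.item()}')
print(f'MSE: {mse_clean.item()} -> {mse_outlier.item()}')
```

A single bad prediction raises the MAE from 0.5 to 2.875, but raises the MSE from 0.25 to 25.1875, so an MSE-trained model is pulled much harder toward fitting that one point.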

The table below provides a good starting point for when to use certain loss functions:

| Loss Function | Mathematical Formulation | Use Case and When to Use It |
|---|---|---|
| L1 Loss (MAE) | L1 Loss = (1/n) * Σ\|y_pred - y_actual\| | Regression tasks with large outliers whose impact you want to reduce. |
| L2 Loss (MSE) | L2 Loss = (1/n) * Σ(y_pred - y_actual)^2 | Regression tasks with relatively well-behaved data. Use when aiming for a tight fit to data points. |
| Cross-Entropy Loss | CE Loss = -Σ(y_actual * log(y_pred)) | Classification tasks with multiple classes. Use when the model predicts a probability for each class. |
| Hinge Loss | Hinge Loss = max(0, 1 - y_actual * y_pred) | Binary classification with labels encoded as -1 and +1, especially for support vector machines (SVMs). |
| Huber Loss | Huber Loss = { (1/2) * (y_pred - y_actual)^2 for \|y_pred - y_actual\| <= δ; δ * (\|y_pred - y_actual\| - δ/2) otherwise } | Regression tasks where outliers are expected but are not as extreme as in cases where L1 Loss would be employed. |
| Poisson Loss | Poisson Loss = Σ(y_pred - y_actual * log(y_pred)) | Count-based prediction tasks, such as predicting the number of events in a fixed interval. |
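As one example from the table, the Huber loss can be sketched by hand and compared with nn.HuberLoss, which is available in PyTorch 1.9 and later. The code uses the standard definition: squared error when the absolute error is at most a threshold delta, and a linear penalty beyond it. The sample values and the choice of delta = 1.0 are assumptions made for illustration:

```python
import torch
import torch.nn as nn

y_pred = torch.tensor([0.5, 2.0, 10.0])
y_actual = torch.tensor([0.0, 2.0, 4.0])
delta = 1.0  # threshold between the quadratic and linear regions

error = (y_pred - y_actual).abs()

# Quadratic for small errors, linear for large ones
manual_huber = torch.where(
    error <= delta,
    0.5 * error ** 2,
    delta * (error - 0.5 * delta),
).mean()

builtin_huber = nn.HuberLoss(delta=delta)(y_pred, y_actual)

print(manual_huber.item(), builtin_huber.item())
```

The large error of 6.0 contributes 5.5 rather than the 18.0 it would contribute under half the squared error, which is how the Huber loss limits the influence of outliers.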

In the next section, we’ll explore how to implement a custom loss function in PyTorch.

Implementing Custom Loss Functions in PyTorch

In some machine learning and deep learning projects, the standard loss functions may not capture the nuances of your problems. In those cases, creating a custom loss function that is tailored to your specific requirements becomes important. Thankfully, PyTorch makes it simple to design and implement a custom loss function.

Let’s see how we can implement a familiar loss function, the mean squared error, from scratch! That way, we can focus less on the intricacies of the loss itself and more on how to implement it in PyTorch.

```python
# Implementing a Custom Loss Function in PyTorch
import torch
import torch.nn as nn

class CustomLoss(nn.Module):
    def __init__(self, custom_parameters):
        super().__init__()
        # Stored for illustration only; not used in the calculation below
        self.custom_parameters = custom_parameters

    def forward(self, predictions, targets):
        # Implement your custom loss logic here
        loss = torch.mean((predictions - targets) ** 2)
        return loss
```

Let’s break down what we did in the code block above:

- We created a class, CustomLoss, which subclassed the nn.Module class. This base class provides the structure needed to implement our custom loss function.

- Within our __init__ method, we defined some custom parameters. In this case, they don’t do anything, but they’re there to illustrate that you can define parameters that control the loss function’s behavior and tune it to your specific problem.

- We implemented the actual loss calculation logic within the forward method. This method takes in predictions (the output of your model) and targets (the ground truth values) as arguments. You can use these values to compute the loss according to your custom logic.

While the example above replicates the mean squared error loss, it can be modified to meet the requirements of your own project.
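As one possible modification, the sketch below puts a constructor parameter to actual use. AsymmetricMSELoss and its under_weight argument are hypothetical names invented for this example, not part of PyTorch; the idea is simply to penalize predictions that are too low more heavily than predictions that are too high:

```python
import torch
import torch.nn as nn

class AsymmetricMSELoss(nn.Module):
    """Hypothetical loss: squared error with under-predictions scaled up."""

    def __init__(self, under_weight=2.0):
        super().__init__()
        self.under_weight = under_weight

    def forward(self, predictions, targets):
        error = predictions - targets
        # error < 0 means the model predicted too low
        weights = torch.where(
            error < 0,
            torch.full_like(error, self.under_weight),
            torch.ones_like(error),
        )
        return torch.mean(weights * error ** 2)

# Usage: the first prediction is too low, the second is too high
criterion = AsymmetricMSELoss(under_weight=3.0)
predictions = torch.tensor([1.0, 5.0], requires_grad=True)
targets = torch.tensor([2.0, 4.0])

loss = criterion(predictions, targets)
loss.backward()

print(f'Loss: {loss.item()}')
print(f'Gradients: {predictions.grad}')
```

Because the loss is built from differentiable tensor operations, autograd handles the backward pass without any extra code, and the gradient for the under-prediction is three times larger in magnitude.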

Monitoring Loss for Deep Learning Projects

Monitoring loss is a fundamental aspect of training deep learning models. It serves as a critical indicator of how well your model is learning from the data during training. Understanding how to effectively monitor loss is essential for achieving optimal model performance.

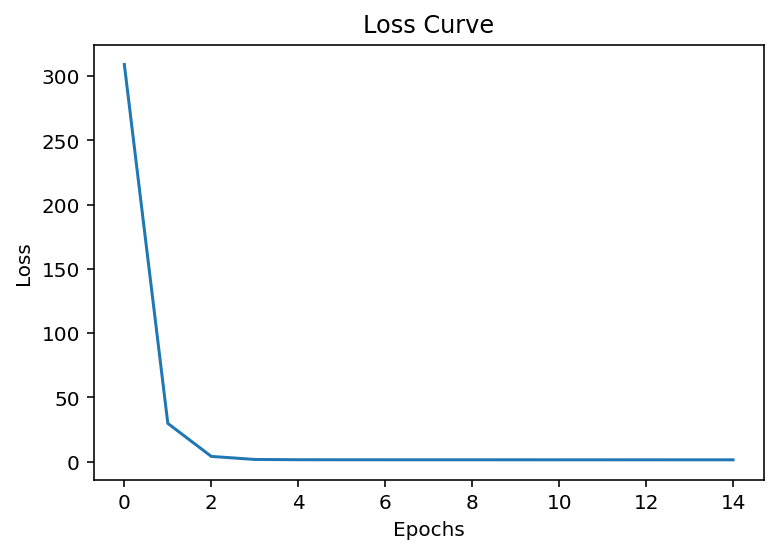

Let’s take a look at a sample deep learning workflow that allows us to track loss over time for a linear regression problem:

```python
# Tracking Loss for Deep Learning Projects
import torch
import torch.nn as nn
import torch.optim as optim
import matplotlib.pyplot as plt

# Sample dataset
X = torch.rand(10000, 1) * 10
y = 2 * X + 1 + torch.randn(10000, 1)

# Define a simple linear regression model
class LinearRegression(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(1, 1)

    def forward(self, x):
        return self.linear(x)

# Initialize the model, loss function, and optimizer
model = LinearRegression()
criterion = nn.MSELoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

# Training loop with loss tracking
num_epochs = 15
losses = []

for epoch in range(num_epochs):
    # Forward pass
    predictions = model(X)

    # Compute the loss
    loss = criterion(predictions, y)

    # Backpropagation and optimization
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    # Append the current loss to the list
    losses.append(loss.item())

    # Print the loss for every 10 epochs
    if (epoch + 1) % 10 == 0:
        print(f'Epoch [{epoch + 1}/{num_epochs}], Loss: {loss.item()}')

# Plotting the loss curve
plt.plot(losses)
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.title('Loss Curve')
plt.show()
```

The code above implements the regular deep learning workflow in PyTorch. The important difference is the tracking of loss. Notice that we initialize losses = [], a list that tracks loss over time. For each epoch, we calculate the loss and append it to the list.

By running this code, we’re able to plot our loss over time, with the epoch on the x-axis and the loss on the y-axis.

In the example above, we only tracked the training loss. It can be helpful to track both the training and validation loss: a validation loss that starts rising while the training loss keeps falling is a common sign of overfitting.
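A minimal sketch of tracking both losses might look like the following. The 80/20 split, the smaller dataset, and the fixed random seed are assumptions made for this example rather than recommendations:

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)  # fixed seed so the run is repeatable

# Sample dataset, split 80/20 into training and validation sets
X = torch.rand(1000, 1) * 10
y = 2 * X + 1 + torch.randn(1000, 1)
X_train, X_val = X[:800], X[800:]
y_train, y_val = y[:800], y[800:]

model = nn.Linear(1, 1)
criterion = nn.MSELoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

train_losses, val_losses = [], []

for epoch in range(15):
    # Training step: the recorded training loss is measured before the update
    model.train()
    optimizer.zero_grad()
    train_loss = criterion(model(X_train), y_train)
    train_loss.backward()
    optimizer.step()

    # Validation step: no gradients needed, measured after the update
    model.eval()
    with torch.no_grad():
        val_loss = criterion(model(X_val), y_val)

    train_losses.append(train_loss.item())
    val_losses.append(val_loss.item())

print(f'Final training loss: {train_losses[-1]}')
print(f'Final validation loss: {val_losses[-1]}')
```

Both lists can then be passed to plt.plot() on the same axes, with a label for each, to compare the two curves.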

Conclusion

In this comprehensive guide, we’ve covered everything you need to know about PyTorch loss functions and how they play a crucial role in training machine learning and deep learning models. Loss functions are the compass that guides your model towards optimal performance by quantifying the disparity between its predictions and the ground truth.

Here’s a recap of what you’ve learned:

- Understanding Loss Functions: Loss functions are essential components of machine learning and deep learning workflows. They measure how well your model is performing its task and help it improve over time.

- Defining Loss Functions: We discussed the definition of loss functions, their role in minimizing errors, and how they guide model training by signaling areas for improvement.

- Common Loss Functions in PyTorch: You gained insights into commonly used loss functions in PyTorch, including L1 Loss (MAE), L2 Loss (MSE), and Cross-Entropy Loss, along with their mathematical formulations.

- Choosing the Right Loss Function: We explored the process of selecting the appropriate loss function based on problem type, data characteristics, and model goals. The choice between precision and robustness was emphasized.

- Implementing Custom Loss Functions: You learned how to create custom loss functions in PyTorch by subclassing nn.Module and defining the loss calculation logic tailored to your specific needs.

- Monitoring Loss for Deep Learning: Monitoring loss is critical in assessing your model’s training progress and performance. We provided sample code demonstrating how to track loss over epochs, visualize it, and use it to guide training decisions.

Loss functions are the guiding compass in the journey of training machine learning models. They evaluate, correct, and lead your model to success. Armed with this knowledge, you are better equipped to select, implement, and monitor loss functions effectively, enabling you to build robust and accurate deep learning models tailored to your specific needs.

To learn more about loss functions in PyTorch, check out the official documentation.